SiteDev Update: Comments Going To Moderation

We were informed by our new host earlier this week that we need to scale back our bandwidth use, upgrade plans (to one that costs 3x as much), or change hosts (again).

So I am implementing some changes on the website to try to scale back our bandwidth. Early indications are that one side effect is that a lot of comments are going to moderation. We’re passing them through as quickly as we can. Sorry for the inconvenience.

If you see any other odd behavior, please let me know.

I will keep you posted.

Possibly the sidebar “state of the discussion” is impacted as well?

That seems… likely. One of the changes is in caching.

I assume it’s not updating?

Seems to be working now.

Weirdly enough I got a ‘your comment is awaiting moderation’ on a comment I just posted to the blitzkrieg post.

(eta – and this one too.)

Wednesday’s Media links has Trumwill’s Twitter stream embedded at the end.

This could be a factor. Also, it’s slightly discombobulating (says the person who Never Ever Wants an Interface To Change).

That was just a goof-up on my part.

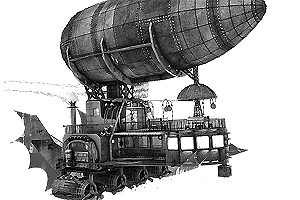

Would removing featured images help with bandwidth? I tend to find they add little value to most posts (which isn’t a criticism of the authors, as I know they are required).

Seems unlikely. They’re pretty small, and are cacheable. I suspect that the main problem is the fact that pages are constantly being updated due to comments, requiring cache invalidation.

One thing that strikes me as potentially being particularly problematic is the inclusion of the “State of the Discussion” sidebar in every page. I don’t have much experience with web design, so I could be wrong about this, but it seems to me that this means that a comment on any post will invalidate the cache for every post. I think it should be possible to pull that content out into a separate page that gets embedded into the main page on the client side (like the Twitter widget), so that the cache on any particular post will be invalidated only if that particular post gets a new comment.

But maybe that’s already being done in a way that’s transparent to me.

Heh. I’ve had to work on this a lot in my professional life, so let me randomly over-explain caching of web pages in case anyone cares, because I am bored:

(Note I have no special inside knowledge of what is going on here besides what I can see in my web browser. Note I also have no idea what level of knowledge you have, Brandon, but I’m trying to aim this at everyone anyway.)

I’m not sure if you’re talking about server caching or client caching. If you’re talking about server caching, this is WordPress, and CMSs like WordPress are specifically designed to cache parts of the page if you set them up that way.

That is, the article part is generated once, the comment part is regenerated whenever a comment changes, the sidebar is regenerated when it changes, and they are all shoved into the template in the correct places and sent out.

Joomla actually has the ability to switch between caching the entire page or caching it in parts, and you pick one, whereas I think WordPress comes with partial caching and there are entire-page cache addons you can install. (I am not as up on WordPress caching options as Joomla.)
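To make the "cache the parts, then assemble" idea concrete, here is a minimal Python sketch. It is not how WordPress actually does it; `FragmentCache`, `build_page`, and the fragment names are made up for illustration, and a real CMS would key fragments per post and store them somewhere more durable than a dict.

```python
from typing import Callable, Dict, List


class FragmentCache:
    """Keeps rendered page fragments; a fragment is re-rendered only after invalidation."""

    def __init__(self) -> None:
        self._rendered: Dict[str, str] = {}
        self.render_count: Dict[str, int] = {}  # how often each fragment was rebuilt

    def get(self, key: str, render: Callable[[], str]) -> str:
        if key not in self._rendered:
            self._rendered[key] = render()
            self.render_count[key] = self.render_count.get(key, 0) + 1
        return self._rendered[key]

    def invalidate(self, key: str) -> None:
        self._rendered.pop(key, None)


def build_page(cache: FragmentCache, article: str, comments: List[str], sidebar: str) -> str:
    """Assemble the page from cached fragments (hypothetical three-part template)."""
    parts = [
        cache.get("article", lambda: f"<article>{article}</article>"),
        cache.get("comments", lambda: "<ol>" + "".join(f"<li>{c}</li>" for c in comments) + "</ol>"),
        cache.get("sidebar", lambda: f"<aside>{sidebar}</aside>"),
    ]
    return "\n".join(parts)


cache = FragmentCache()
comments = ["first"]
build_page(cache, "Post body", comments, "recent comments")

# A new comment arrives: only the comments fragment is thrown away and rebuilt.
comments.append("second")
cache.invalidate("comments")
page = build_page(cache, "Post body", comments, "recent comments")
print(cache.render_count)
```

The article and sidebar are each rendered once across both requests; only the comment list is rendered twice.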

But that’s all server caching, and doesn’t save any bandwidth, just some CPU.

—

The only way to save bandwidth is browser (or proxy) caching. However, there are problems. The HTML pages generated here are not cached at all in the web browser, and they mostly couldn’t be. The web browser can’t know someone else made a comment.

Which means the page has to be resent, or at least _requested_, each time, regardless of whether it has changed or not.

Now, that ‘requested’ is important. A web server, when sending a response, can add a specific header called ‘Last-Modified’, which is the date the ‘thing’ was modified. If the response is a real file on the disk, like an image, it’s almost always the ‘last modified’ date from the filesystem. If it, like this web page, is a generated response (basically the output of a computer program written in PHP), it would hypothetically be the date of whatever the most recent database record that ended up on the page was. (The newest comment, the newest sidebar, etc.)

This Last-Modified date is kept right next to the cached response in the web browser.

The web browser then sends that date back to the server (in an ‘If-Modified-Since’ header) when it needs to load the page again, and the web server can choose to say ‘The page didn’t change since that time, so I’m not giving it to you, use the cached one you already have’ (a ‘304 Not Modified’ response).

So there still is a request, but the response is much much shorter.
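Here is a rough Python sketch of that exchange from the server’s side. The `respond` function is hypothetical (it is not any real server’s API); it just shows the date comparison and the two possible answers, using the standard library’s HTTP date helpers.

```python
from datetime import datetime, timezone
from email.utils import format_datetime, parsedate_to_datetime
from typing import Dict, Optional, Tuple


def respond(last_modified: datetime, body: str,
            if_modified_since: Optional[str]) -> Tuple[int, Dict[str, str], str]:
    """Return (status, headers, body) for a GET, honoring the date the browser sent back."""
    # HTTP dates only have one-second resolution, so drop microseconds before comparing.
    last_modified = last_modified.astimezone(timezone.utc).replace(microsecond=0)
    headers = {"Last-Modified": format_datetime(last_modified, usegmt=True)}

    if if_modified_since is not None:
        try:
            cached_at = parsedate_to_datetime(if_modified_since)
        except (TypeError, ValueError):
            cached_at = None  # unparseable date: ignore it and send the full page
        if cached_at is not None:
            if cached_at.tzinfo is None:
                cached_at = cached_at.replace(tzinfo=timezone.utc)
            if last_modified <= cached_at:
                return 304, headers, ""  # "use the copy you already have"

    return 200, headers, body


modified = datetime(2015, 6, 1, 12, 0, 0, tzinfo=timezone.utc)
first = respond(modified, "<html>page</html>", None)
# The browser stores the date and sends it back on the next load.
second = respond(modified, "<html>page</html>", first[1]["Last-Modified"])
print(first[0], second[0])
```

The second response carries headers only, which is where the bandwidth saving would come from.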

In theory, this could be turned on here.

And, indeed, the sidebar would be an issue there. Having a changing sidebar could mean ‘the page’ changes more often, so the server pointlessly sends an otherwise identical page with a slight change in it.

Of course, instead of embedding another web page in an iframe or something, it’s technically possible to just make the Last-Modified time based only off the latest comment timestamp, and not count when the sidebar changed. (I mean, this isn’t really a ‘file’, so WordPress is basically just inventing the modification time anyway, we can make it whatever we want.)

Which means people reloading the page might have their browser be told ‘page has not changed’ when the sidebar actually has. So if there are no new comments, they see their old cached page with the old sidebar, but that seems like a reasonable trade off.
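Since the date is invented anyway, the choice is basically one line. A small sketch, with a made-up `page_last_modified` helper and a `count_sidebar` switch that exists purely for illustration:

```python
from datetime import datetime, timezone
from typing import Iterable


def page_last_modified(post_published: datetime,
                       comment_times: Iterable[datetime],
                       sidebar_updated: datetime,
                       count_sidebar: bool = False) -> datetime:
    """Pick the date to advertise as Last-Modified for a generated page."""
    candidates = [post_published, *comment_times]
    if count_sidebar:
        # Counting the sidebar makes every page "change" whenever anyone comments anywhere.
        candidates.append(sidebar_updated)
    return max(candidates)


post = datetime(2015, 6, 1, 9, 0, tzinfo=timezone.utc)
comments = [datetime(2015, 6, 1, 10, 0, tzinfo=timezone.utc),
            datetime(2015, 6, 1, 11, 30, tzinfo=timezone.utc)]
sidebar = datetime(2015, 6, 1, 12, 0, tzinfo=timezone.utc)

print(page_last_modified(post, comments, sidebar))
print(page_last_modified(post, comments, sidebar, count_sidebar=True))
```

With the default, a sidebar-only change leaves the date at the newest comment, so a reload would get ‘not modified’ and keep showing the stale sidebar.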

—

Except, I just lied, none of that matters, because there’s no Last-Modified on the page here, and there’s good reason for that.

With PHP scripts, and in fact most dynamic web pages, figuring out if the page has changed is basically exactly as complicated as handing out the damn page. Most of the time, it involves regenerating the page entirely, and then comparing the generated Last-Modified to the one requested, saving no CPU at all.(1)

However, people then think, okay, sure, Last-Modified checking won’t save CPU. But not sending the page would still save some _bandwidth_, so, still a good idea?

The thing is, the HTML source of a web page is a rather small amount of the total bandwidth, and almost anything is better to spend time on. Hell, a lot of sites, this one included, don’t even compress the page as it goes out, because CPU is more costly than bandwidth.

So this site, and in fact most sites, don’t end up putting Last-Modified on the generated HTML.

Now, don’t get me wrong, for static files that physically exist on disk, Last-Modified works fine. Not only is the ‘last modified’ date already on the disk, it’s automatically kept up to date when the file is modified. (Duh) And on any modern OS, looking up whether or not a file exists results in the file dates, sizes, permissions, etc, all being loaded in memory, which means the web server gets the last modified date ‘for free’.
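For comparison, this is roughly all a server has to do for a static file. A Python sketch with a hypothetical `static_last_modified` helper; the point is only that one `stat` call hands over the date with no page generation involved.

```python
import os
from datetime import datetime, timezone
from email.utils import format_datetime


def static_last_modified(path: str) -> str:
    """Build a Last-Modified header value straight from the filesystem metadata."""
    info = os.stat(path)  # the same call a server makes to check the file exists
    mtime = datetime.fromtimestamp(int(info.st_mtime), tz=timezone.utc)
    return format_datetime(mtime, usegmt=True)


# Demo on a throwaway file in the current directory (the file name is arbitrary).
demo_path = "last_modified_demo.txt"
with open(demo_path, "w") as handle:
    handle.write("hello")
os.utime(demo_path, (86400, 86400))  # pin the mtime to one day after the Unix epoch
header_value = static_last_modified(demo_path)
os.remove(demo_path)
print(header_value)
```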

So for static files, Last-Modified works great and it’s dumb to _not_ use. For dynamic requests that the server generates on the fly, it works in theory, but is somewhat impractical in actual practice, in that by the time the server figures out if the dynamic page has been modified, the server is probably already holding the damn page anyway and might as well give it out.

1) Programming aside: Programmers often think, when learning this, ‘Oh, well, it should just do all the database lookups first, which would let it get dates, and then it can return Not Modified at that point, if none of that has been modified since Last-Modified. Otherwise it can go on to build the page.’

Except that isn’t how WordPress, or any CMS, is structured to work at all, and no one’s going to restructure them that way: it would be very hard to extend, and it would result in large memory usage, as all the DB query results would have to sit in memory at once. And, in the end, the actual ‘make a page’ is basically trivial compared to the database lookup stuff, so the entire thing is pointless.

The thing is, the HTML source of a web page is a rather small amount of the total bandwidth

This is probably true in general, but I think sites like this are an exception. As an example, I just downloaded a copy of Saul’s “Putting on Airs” post (currently at 164 comments), and it’s 977kB. The large number of comments combined with the extensive boilerplate markup included in each comment really bloats the source.

And the source is basically the only thing that’s changing with any frequency. Even when I do a soft refresh, all the JavaScript and images are just loaded from my browser cache. On the other hand, it seems like that should compress very well (locally, it compresses to a 100k RAR), and unless I misunderstand what Chrome is telling me (again, not a web dev, so I rarely use the Chrome dev tools), it’s actually downloading 840kB for the main document, and 922kB in total (almost all of the rest is loaded directly from Twitter, so the source of the main document accounts for more than 99% of the bandwidth from OT).

If it were actually doing compression, I’d expect it to do much better than that. And if it’s not doing compression, it should download the full 977k, so I’m not sure what’s going on. Only thing I can think of is that maybe when I save a local copy, Chrome inlines some auxiliary files like CSS and JavaScript.

Anyway, if the site doesn’t have compression enabled, that might be some low-hanging fruit.
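As a rough sanity check on how well this kind of page should compress, here is a small Python sketch that gzips a page built from repetitive comment markup. The markup in `fake_comment` is invented, not copied from this site, so the ratio it prints is only indicative.

```python
import gzip


def fake_comment(index: int) -> str:
    """Invented comment markup: lots of identical boilerplate around a little text."""
    return (
        f'<li class="comment byuser even thread-even depth-1" id="comment-{index}">'
        f'<div class="comment-author vcard"><img class="avatar avatar-48 photo" '
        f'src="/avatars/{index}.png" height="48" width="48">'
        f'<cite class="fn">Commenter {index}</cite></div>'
        f'<div class="comment-meta commentmetadata"><a href="#comment-{index}">permalink</a></div>'
        f'<p>Comment number {index} says something.</p>'
        f'<div class="reply"><a class="comment-reply-link" href="?replytocom={index}">Reply</a></div>'
        f'</li>\n'
    )


def compressed_size(html: str, level: int = 6) -> int:
    """Size in bytes after gzip, the encoding browsers accept via Content-Encoding."""
    return len(gzip.compress(html.encode("utf-8"), compresslevel=level))


page = "".join(fake_comment(i) for i in range(164))  # 164 comments, as in the example post
raw_size = len(page.encode("utf-8"))
gzip_size = compressed_size(page)
print(f"raw: {raw_size} bytes, gzipped: {gzip_size} bytes")
```

Real comments have far more varied text than this, so a real page would compress less dramatically, but the boilerplate wrapped around each comment is exactly the kind of thing gzip handles well.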

In retrospect, even putting aside difficulties of implementation, browser caching doesn’t actually help much if the primary use case is people actively refreshing for new comments.

the actual ‘make a page’ is basically trivial compared to the database lookup stuff, so the entire thing is pointless.

But doesn’t the actual “make a page” stuff involve database queries? At a minimum, I’d guess that building this page would require you to hit up the comment database, the post database, and the author database, and probably some other stuff that’s not quite as obvious. One small query to see if the page has been updated seems like a bargain if the answer is usually no. And why even go to the database? Just cache it in memory, and invalidate the cache when you touch something on the page. As long as you’re running WP on a single server, you’re golden. The worst-case scenario is that WP crashes and you lose all the stuff you had in memory—and have to regenerate it, like you would have had to anyway.
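Something like this toy Python sketch is what I have in mind. The `Blog` class and its methods are invented for illustration, and it assumes a single process, which is the whole reason it works.

```python
from typing import Dict, List


class Blog:
    """Toy single-process blog: rendered pages stay in memory until a write touches them."""

    def __init__(self) -> None:
        self.comments: Dict[int, List[str]] = {}
        self._pages: Dict[int, str] = {}
        self.renders = 0  # counts trips down the expensive path

    def _render(self, post_id: int) -> str:
        # Stand-in for the real work: database queries plus templating.
        self.renders += 1
        items = "".join(f"<li>{text}</li>" for text in self.comments.get(post_id, []))
        return f"<h1>Post {post_id}</h1><ol>{items}</ol>"

    def page(self, post_id: int) -> str:
        if post_id not in self._pages:
            self._pages[post_id] = self._render(post_id)
        return self._pages[post_id]

    def add_comment(self, post_id: int, text: str) -> None:
        self.comments.setdefault(post_id, []).append(text)
        self._pages.pop(post_id, None)  # invalidate only the page that changed


blog = Blog()
blog.page(1)
blog.page(1)  # served from memory
blog.page(2)
blog.add_comment(1, "hello")
updated = blog.page(1)  # re-rendered because post 1 was touched
blog.page(2)            # still served from memory
print(blog.renders)
```

If the process dies, the dict is gone and everything gets rendered again on the next request, which is no worse than having no cache.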

I used to be part of a group WordPress blog about ten years ago, and while I wasn’t doing the development, I remember that when our dev implemented login and personalization for regular users, CPU usage went through the roof because we couldn’t do server-side caching of generated pages anymore (because each user got a different version of the page), so I’m pretty sure WP does do at least some server-side caching of generated pages.

Anyway, I’m mostly just trying to reason from CS fundamentals, so I probably don’t know what I’m talking about.