Thursday Throughput: Chat GPT Is Not Your Research Assistant

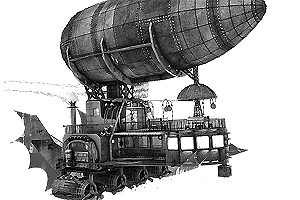

Photo by David James Henry, CC BY-SA 4.0

[ThTh1] I haven’t written much about the recent foofaraw over AI because … well, quite frankly, I really don’t know what to make of it. A lot of claims are flying around, few rooted in facts. I will, at some point, do the homework needed to comment intelligently on this. But things are moving so fast, it’s hard to keep up.

One thing I can say with great certainty, however: do not use Chat GPT to do your legal research for you.

The basic story is this. Two lawyers used Chat GPT to write a brief on behalf of a client. That brief made a good legal argument citing several relevant parts of case law. The problem was … and I don’t want to get too technical here … those cases did not actually, you know, exist. Chat GPT made them up. And now the lawyers are facing sanctions from a very annoyed judge.

This has become a running theme with Chat GPT. It has a tendency to very confidently say things that are not true and cite sources that are fictional. I discovered this early on when I asked the program why astronauts float in space and it gave me a very well-worded incorrect answer (“there’s no gravity in space”). Something has apparently been fixed because, before I wrote this, I asked again and it gave the correct one (“astronauts are falling around the Earth”).

This is because Chat GPT is not really an AI; it’s more of a predictive text generator that tries to summarize what is in its knowledge base, tempered by the biases of its programmers. The latter sounds bad but it’s actually important because past chatbots have been tricked into spouting racist gibberish.

So why would this predictive chatbot lie? I can’t find a definitive answer because even computer experts are still trying to figure this out. I will not claim to have any insider knowledge. But I wonder if this is happening because Chat GPT, by its nature, is resistant to saying three of the most important words in the English language:

I. Don’t. Know.

In this case, for example, it has access to a vast number of legal cases. But none were really relevant to the dispute at hand. So it predicted what a well-read lawyer might cite and made up cases that might exist relevant to this legal dispute. It made a prediction, based on its knowledge, of what the case law should be. Only the case law wasn’t that way because it exists in real space, not in probability space.

This is just me spitballing and maybe an expert can tell me in the comments why I’m wrong. But I throw this story out there for three reasons. First, to note that a lot of what we’re calling AI is not, in fact, AI in any real sense. Second, to remind everyone that we are still in the early days of AI and not even its creators understand what it’s doing.

But third, to say that I agree with the critics who want this technology to always be under human supervision and control. Because, in the end, as the Doctor once said:

The trouble with computers, of course, is that they’re very sophisticated idiots. They do exactly what you tell them at amazing speed, even if you order them to kill you. So if you do happen to change your mind, it’s very difficult to stop them obeying the original order, but… not impossible.

AI, and related technologies like Chat GPT, have a lot to offer us. But let’s make sure we can always pull the plug.

And, for God’s sake, don’t let them do your research for you.

[ThTh2] In a past throughput, I wrote about a promising vaccine against pancreatic cancer. This week brings news of a vaccine against brain cancer that is doubling survival time. This is another particularly cruel cancer so let’s hope further testing bears out these results.

[ThTh3] For the first time in decades, a new nuclear power plant is coming online. However, I don’t think this model is sustainable. The plant took 14 years to build and cost overruns pushed the cost to over $20 billion. Hopefully, newer reactor designs can increase our nuclear capacity more quickly and at lower cost.

[ThTh4] I like LEGO. And I like space. So what could be better than. LEGO. In. Spaaaaaace.

[ThTh5] So what’s JWST been up to lately? Oh, nothing much. Just transforming our understanding of the early universe. We’re finding that galaxies built up much faster and much more dramatically than we’d theorized. A whole bunch of work needs to be done so that the theories can catch up to the observations. This is why we built the thing.

[ThTh6] A few “bombshells” have dropped lately, claiming to offer proof that COVID-19 originated in a lab. Virologist Dr. Angela Rasmussen tears into these articles, pointing out that most of this information is not new or is being misrepresented. The most recent claim is that the first people infected were Wuhan Institute scientists but, at present, this information is unverified.

[ThTh7] Virgin birth in a crocodile? Isn’t that one of the signs of the apocalypse?

An LLM predicts the most likely next token, given the previous n tokens of context (initially the prompt, and then the text it already generated) and based on what it saw in the training data. It’s not that it chooses to make up fake citations or factoids. It’s just that it gets to a point in the generated text where a citation seems likely to occur, and then it chains together a plausible-sounding citation given the context.
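To make that concrete, here is a minimal sketch of the same idea at toy scale. It is not how ChatGPT is built: it is a bigram model that only looks at the single previous token, and every case name in the training text is invented for illustration. The point is only that picking the most likely next token at each step can assemble a citation that looks right but appears nowhere in the training data.

```python
from collections import Counter, defaultdict

# Invented "training data": four made-up citations (none are real cases).
CORPUS = [
    "see Jones v. Delta Freight , 2012",
    "see Jones v. Harbor Freight , 2015",
    "see Smith v. Acme Airlines , 2010",
    "see Lopez v. Acme Airlines , 2010",
]

def train_bigram(corpus):
    """Count how often each token follows each other token."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        tokens = sentence.split()
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    return counts

def generate(counts, prompt, max_tokens=10):
    """Greedily append the most frequent follower of the last token."""
    tokens = prompt.split()
    for _ in range(max_tokens):
        followers = counts.get(tokens[-1])
        if not followers:
            break  # nothing ever followed this token in training
        tokens.append(followers.most_common(1)[0][0])
    return " ".join(tokens)

model = train_bigram(CORPUS)
citation = generate(model, "see")
print(citation)            # see Jones v. Acme Airlines , 2010
print(citation in CORPUS)  # False
```

Each step is a reasonable prediction on its own ("Jones" is the most common plaintiff, "Acme" the most common defendant, 2010 the most common year), yet the finished citation is one the model never saw. Nothing in the loop checks whether the result exists.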

I’ve actually gotten ChatGPT to give valid citations in the past, e.g. here’s the first paragraph when I ask it about the paper that claimed vaccines cause autism:

Buuurn!

At first I thought this was gibberish, but that actually was the title. I think the key is that it has to be a paper that’s talked about a lot in contexts similar to the one you provide.

I’ve gotten valid citations for important papers in more niche topics, as well, so I think it’s the context-relevant prominence of the paper that matters, not the absolute prominence.

That’s a really good way of putting it, yes.

Can we keep the teenage-Earth creationism out of the science threads?

LOL. What a typo.

On second thought, a planet that took 14 years to create may be much, much older than 14 years.

ThTh3: In the past, I have referenced the UAMPS small modular reactor project licensed to be built at the Idaho National Lab. A key claim for the project was the $58/MWh levelized cost for the electricity it would produce. Earlier this year a more complete estimate of the construction costs was finished, and the levelized cost estimate was increased to $119/MWh. Participating utilities would only have to pay $89/MWh, because the Inflation Reduction Act provides for a $30/MWh subsidy. As a consequence of the higher estimate, the development agreement for the project has been revised. If the estimate increases again by the end of 2023, or the manufacturer and DOE fail to find sufficient additional participants by then, the UAMPS group can bail on the project and receive 100% reimbursement of any money they have already spent. The bail-out provision is convenient — my prediction all along has been that the project would bankrupt the UAMPS members, none of which are very large.

At least regionally, with the same $30/MWh subsidy, some wind and solar PV in particularly favorable locations would have a levelized cost of $0/MWh.
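The subsidy arithmetic in this comment can be checked in a few lines. This is only a sketch using the dollar figures quoted above; the function name is made up for illustration, and the pre-subsidy wind and solar figure is an inference from the comment's $0/MWh claim, not a number the comment states.

```python
SUBSIDY = 30  # $/MWh, the Inflation Reduction Act figure quoted in the comment

def cost_after_subsidy(levelized_cost, subsidy=SUBSIDY):
    """Per-MWh cost to the buyer once the subsidy is subtracted."""
    return levelized_cost - subsidy

original_estimate = 58   # $/MWh, the project's early claim
revised_estimate = 119   # $/MWh, after the fuller construction estimate

print(cost_after_subsidy(revised_estimate))              # 89
print(round(revised_estimate / original_estimate, 2))    # 2.05

# Inference only: a subsidized cost of $0/MWh implies about $30/MWh before subsidy.
implied_wind_solar_cost = 0 + SUBSIDY
print(implied_wind_solar_cost)                           # 30
```

So the revised estimate is roughly double the original claim, and even after the subsidy the reactor's power would cost about $89/MWh against roughly $0/MWh for the best-sited wind and solar.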

This is not an R&D funding model I’ve ever seen before. Am I missing something, is this a one-off, is this the future, where the government absorbs 100% of the risk?

Sorry, I wasn’t clear: NuScale, the developer of the tech, would be on the hook for reimbursing UAMPS.

And if the fission costs aren’t enough headache for nuclear advocates, Scientific American reports that the ITER fusion project has more problems. Unreleased internal documents apparently show a new 3+ year delay for first plasma, and unknown cost increases. Issues include fabricated parts that don’t meet spec, welders whose certifications to work on nuclear projects were forged, and a work stoppage called by the French nuclear safety authorities who want to take another look at the radiation containment design.

ThTh3 – I think if you built a single car every 14 years it’d cost $20 billion.

ThTh1. I *AM* a lawyer and I’m mortified. I’ll admit to having not back-checked every case I’ve ever cited — shame on me — but when I’ve done that, I’ve taken citations from VERY reliable sources. Fellow California lawyers can attest to the universality and reliability of the Rutter Guides, and the Witkin Legal Encyclopedia.

These yahoos asked ChatGPT for a brief, and filed it, seemingly having barely read it. Malpractice seems too gentle a word for this, but the poverty of the English language is such that we’ll have to stipulate that this is as unthinkable as a catcher telling the batter what pitch is coming next.

ThTh1: So why would this predictive chatbot lie?

The chatbot’s primary function is to make stuff up. If you ask it to make an English paper for you, it’s not copying someone else’s work, it’s generating it. If you ask it to make a paper using the same tone and style of [person x], then it’s still generating it.

“Generating it” means “make stuff up”, i.e. “lie”.

So if you ask it to make a legal paper for submission, it had better be submitted to an English teacher and not a Judge.