Tech Tuesday: No Class Today Edition

Ik T from Kanagawa, Japan, CC BY 2.0

I’m between quarters, and my last day of work is next week, so I have a wee bit of free time for a long overdue Tech Tuesday!

TT1: First up, Helion. Fusion startup, novel idea, but what caught my eye is that they aren’t making electricity by heating a working fluid and using it to spin a generator somewhere. They use the reaction, via Faraday’s Law, to induce current directly in conductors. No working fluid, just massive pulsing magnetic fields forcing electrons to move in the conductors. I have no idea if other startups are looking at a similar path to produce electricity, but it’s the first time I’ve read about it.
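As a refresher on Faraday’s Law itself: the voltage induced in a coil is proportional to how fast the magnetic flux through it changes, EMF = -N dΦ/dt. The minimal Python sketch below estimates that with a finite difference over sampled flux values. The coil turns, flux samples, and time step are made-up illustration numbers, not anything Helion has published, and the sketch says nothing about how their hardware is actually built.

```python
def induced_emf(turns, flux_samples_wb, dt_s):
    """Approximate induced EMF (volts) between consecutive flux samples.

    Faraday's Law: emf = -N * dPhi/dt, estimated here with a simple
    forward difference between samples spaced dt_s seconds apart.
    """
    return [
        -turns * (later - earlier) / dt_s
        for earlier, later in zip(flux_samples_wb, flux_samples_wb[1:])
    ]


# Hypothetical numbers: a 100-turn coil whose linked flux rises by
# 0.5 Wb every millisecond.
print(induced_emf(100, [0.0, 0.5, 1.0], 0.001))
```

The faster the flux swings, the larger the induced voltage, which is why rapidly pulsing very strong fields matters for this approach.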

TT2: Quantum Batteries. Sounds like something out of a Sci-Fi show. Here’s what makes it sound like someone trying to sell you a device to make your car run on water: theoretically, the bigger the battery, the faster it can charge. Something to do with a property called ‘Superabsorption’. It’s been a long time since I took Modern Physics, so I’ll admit I’d have to crack open a new physics textbook to understand what that means.

TT3: Sticking with energy, we’ve known for a long time that Aluminum loves itself some Oxygen atoms, and when bare Aluminum is exposed to moisture, the surface will immediately begin forming an oxide coating, stripping the Oxygen from the water molecules and releasing the Hydrogen to the atmosphere. Once the coating has fully covered the bare surface, the reaction stops. We leveraged this on the LCACs, keeping most of the craft unpainted and trusting the oxide layer to halt corrosion (we still rinsed them off after every mission, because salt water will still eat away at the metal). All this to say, Aluminum is a great way to create Hydrogen from water, because you don’t need to input electricity. Of course, you only get a tiny bit of Hydrogen before the oxide layer shuts everything down. Unless you add Gallium. Gallium will strip away the oxide layer, exposing fresh Aluminum atoms eager to cut in on the Hydrogen/Oxygen dance. And the Gallium is recoverable. So is the Aluminum Oxide, which has many other industrial uses.
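To put a rough number on “a great way to create Hydrogen”: the overall reaction is 2 Al + 3 H2O → Al2O3 + 3 H2, so every two aluminum atoms free three hydrogen molecules. The Python sketch below does that stoichiometry. It assumes all of the aluminum reacts (which is what the Gallium is there to allow) and ignores real-world losses, so treat the result as an upper bound.

```python
# Standard molar masses in g/mol (rounded).
MOLAR_MASS_AL = 26.98
MOLAR_MASS_H2 = 2.016


def hydrogen_from_aluminum(kg_al):
    """Upper-bound mass of H2 (kg) if all the aluminum reacts via
    2 Al + 3 H2O -> Al2O3 + 3 H2, i.e. 3 mol of H2 per 2 mol of Al."""
    mol_al = kg_al * 1000 / MOLAR_MASS_AL
    mol_h2 = mol_al * 3 / 2
    return mol_h2 * MOLAR_MASS_H2 / 1000


print(f"{hydrogen_from_aluminum(1.0):.3f} kg H2 per kg Al")
```

That works out to roughly 0.11 kg of hydrogen per kilogram of aluminum, before any losses.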

TT4: Using AI to improve the growth efficiency of algae ponds used for bio-fuels. The name of the game is minimizing mutual shading. Algae need sunlight, and as algae cells grow, they shade out smaller cells. Making sure they all get enough sunlight maximizes the cell size at harvest time. Early estimates make it competitive with corn as a feedstock for bio-fuels, and the algae don’t need the energy-intensive post-treatment and fermentation that corn does.
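Mutual shading is commonly approximated with the Beer-Lambert law: light intensity decays exponentially with depth, and the decay gets steeper as the culture gets denser. This small Python sketch shows only that effect. The extinction coefficient, densities, and depth are placeholder values chosen for illustration, and the AI side of the work is not modeled at all.

```python
import math


def light_at_depth(surface_light, extinction_coeff, cell_density, depth_m):
    """Beer-Lambert attenuation: I(z) = I0 * exp(-k * C * z).

    extinction_coeff k is in m^2 per gram of biomass, cell_density C in
    grams per m^3, depth in meters, so k * C * z is dimensionless.
    """
    return surface_light * math.exp(-extinction_coeff * cell_density * depth_m)


# Placeholder values: doubling the density at the same depth cuts the
# light reaching that depth from about 37% to about 14% of the surface.
for density in (50, 100):
    share = light_at_depth(1.0, 0.2, density, 0.1)
    print(f"density {density} g/m^3 -> {share:.0%} of surface light at 10 cm")
```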

TT5: A new, flexible Seebeck generator (a thermoelectric generator, or TEG) could be wrapped around exhaust or other waste-heat pipes to capture some of that wasted energy. Capturing the heat that is necessarily wasted as part of any industrial process is nothing new: Navy tin cans have waste heat boilers in the gas turbine exhaust stacks to provide hot water for showers, cooking, dishes, etc. Seebeck generators are nothing new either, but they are typically flat and rigid, so they can’t be wrapped around curved surfaces. Like pipes, which are round, and bend this way and that. Being able to wrap those pipes in TEGs would improve system efficiency a notable amount.
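For a feel of the physics: a thermoelectric module’s open-circuit voltage is roughly its effective Seebeck coefficient times the temperature difference across it, V = S·ΔT, and it delivers the most power when the load resistance matches its internal resistance, giving P = V²/(4R). The Python sketch below plugs in made-up module values; real numbers depend heavily on the material, and the ΔT across the module itself can be well below the pipe-to-air difference.

```python
def teg_max_power(seebeck_v_per_k, delta_t_k, internal_ohms):
    """Estimated maximum electrical power (W) from a thermoelectric module.

    Open-circuit voltage V = S * dT; with a matched load (load resistance
    equal to internal resistance) the delivered power is V**2 / (4 * R).
    """
    v_open = seebeck_v_per_k * delta_t_k
    return v_open ** 2 / (4 * internal_ohms)


# Hypothetical module: S = 0.05 V/K, 100 K across it, 2 ohm internal resistance.
print(f"{teg_max_power(0.05, 100, 2.0):.3f} W")
```

Because power scales with the square of ΔT in this model, the hottest stretches of pipe are where wrapping pays off most.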

TT6: We all know about alkaline batteries, Nickel-Cadmium (NiCad), Nickel Metal Hydride (NiMH), Lead Acid, and Lithium Ion (Li-ion), but how about Sodium-Sulfur? It’s not a leap in battery storage capacity or charge rate, but it would be a leap in reducing the cost of such batteries.

TT7: Shift a bit to climate and decarbonization. RMIT has worked out how to put an old trick to an industrial use. Using a tank of liquid metal at about 120 °C, they bubble CO2 through the metal. As the bubbles rise, the metal acts as a catalyst to strip off the Carbon atoms, which then form into flakes of pure Carbon and sink to the bottom, while the Oxygen bubbles off.
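The mass balance on that process is simple: each CO2 molecule is one carbon atom and two oxygen atoms, so at most about 27% of the CO2’s mass can come out as solid carbon. A quick Python sketch of that arithmetic, assuming every molecule is fully split into C and O2 (the real conversion rate is a separate question):

```python
# Standard molar masses in g/mol (rounded).
MOLAR_MASS_C = 12.011
MOLAR_MASS_O2 = 31.998
MOLAR_MASS_CO2 = MOLAR_MASS_C + MOLAR_MASS_O2  # about 44.009


def carbon_from_co2(kg_co2):
    """Maximum solid carbon (kg) if all the CO2 splits as CO2 -> C + O2."""
    return kg_co2 * MOLAR_MASS_C / MOLAR_MASS_CO2


print(f"{carbon_from_co2(1000):.0f} kg of carbon per tonne of CO2")
```

So a tonne of CO2 yields roughly 273 kg of carbon at best, with the remaining ~727 kg leaving as oxygen.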

TT8: While CO2 and global warming are the environmental crisis du jour, it is critical that we not allow governments and policy makers to blame them for other environmental disasters that have much more to do with other bad policy decisions (e.g. aggressive forest fire suppression, or draining wetlands, etc.).

TT9: Plastics with sugar as a feedstock. SWEET! Kidding aside, plastics need a carbon-rich feedstock, usually petroleum hydrocarbons, and sugar (a carbohydrate, not a hydrocarbon) can supply that carbon instead.

TT10: Nuclear Salt Water Rockets. NOT for use inside atmospheres.

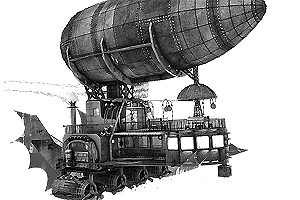

TT11: I guess I’m into the offbeat stuff, so… It’s a bird?! It’s a plane?! Yes?

TT12: Will your next drone look like this?

TT13: This looks remarkably scalable. I wonder what it would sound like on sonar? How fast can it go? Will it be part of the winter Olympics?

TT14: The incredible Fig. And here I thought they were just yummy in cookies.

TT15: The death of Moore’s Law, and why that is probably a good thing.

Bird-plane and cuttle-sub: we’re leaving our brutalist phase and entering our gothic tech phase.

I’d say we are going back to our gothic tech phase. Just watch some of those early flight attempt movies…

What’s interesting to me is that way back when, we looked to nature to see how it solved problems like flight, realized we didn’t have the tech to copy nature, and figured out what we could do with the tech we had. Now our tech has advanced to the point we can start looking back to nature.

My future HPS dissertation is on seminal designs bypassed by brute-force technology, only to be surpassed by future designs based on the seminal theories.

From TT15: “The problem with Moore’s Law in 2022 is that the size of a transistor is now so small that there just isn’t much more we can do to make them smaller.”

I want to say that I remember reading something exactly like this in 2007 talking about how we’re going to start stalling in 2012 or so.

I do appreciate that this time it’s different.

Maybe we could finally get software devs to clean up their code…

Speaking as someone currently taking a software engineering course, first we have to get CS programs to teach what writing clean code means.

Unfortunately, that generally requires experience to understand.

Generally experience fixing someone else’s mess.

To get there, you first have to learn to code, and at that stage most students won’t be focused on clean code (nor would they really internalize it). It’s hard enough to get them to write comments, and you can actually grade that trivially. Instead, they’re focused on trying to understand the underlying principles.

Of course, my definition of “clean code” might not be identical to yours. I’ve come to believe, over the years, that code should be judged not purely in terms of processor optimization but in terms of maintainability and future extensibility.

I’ll happily take a hit on an algorithm and add a few extra processor cycles to complete (nothing I make even comes CLOSE to the limits of modern hardware, so we have processor and memory to burn) if I can make that algorithm more readable and adjustable in the future.

I do this because I’ve had to fix someone’s incredibly tight, well-written, pointer-arithmetic-heavy, highly optimized nested array setup. It worked well and blindingly fast, right up until you needed to extend it, at which point it all broke down and two of us had to spend a week sorting out exactly how the thing worked. There were, of course, only a handful of incredibly useless comments, and the less said about the variable names the better.

We replaced it with something that ran 10% slower, but where the internal array elements could be easily modified in the future.

A real rock star type wrote it originally — it really was the clever sort of thing you’d find in something pushing the edge of hardware (and maybe, it being 20 years old, that was the case then). It was replaced by two decent coders with an eye towards letting anyone with a clue use it and amend it later.

The Clean Code I know is what Robert Martin lays out in his book, “Clean Code”. One of the things I take to heart is his admonishment to stop trying to do the compiler’s job. Write code for humans to read, and let the compiler, if it’s worth half a damn, do all the machine level optimization.

My current migraine-inducing code (I’ve mentioned it before) is a mess of 2,000-line functions/methods that do all kinds of different things (violating the key “Do One Thing” rule), with variables named as if character limits were still a thing to worry about. And of course, comments that make me wonder if the author was getting docked for each character of comment.

“One of the things I take to heart is his admonishment to stop trying to do the compiler’s job. Write code for humans to read, and let the compiler, if it’s worth half a damn, do all the machine level optimization.”

heck yeah. I am 100% on board with that.

Of course, I deal with legacy code. It’s a rare day I get the time and go-ahead to refactor something, not when there are always new features and abilities to add and actual bugs to fix.

I’ve got a convoluted section of code I’ve got my eye on right now. It’d bluntly be more readable, more extensible, and easier to maintain if we replaced what we have with a simple giant switch statement on our major “cases” and just defined what was, and what wasn’t, enabled for each case in that sub-section.

Case A: Option A yes, Option B no, Option C yes, Option C1 yes, Option C2 yes

Case B: Option A no, Option B yes, Option C no, Options C1-C2 no…
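A minimal Python sketch of that proposal, with a lookup table standing in for the switch statement. The case names and option fields are placeholders taken from the Case A / Case B example; the point is that every case spells out every option explicitly, so nothing gets quietly switched back on by a “generic” rule somewhere else.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Options:
    """Every option is spelled out per case; nothing inherits from a generic rule."""
    a: bool
    b: bool
    c: bool
    c1: bool
    c2: bool


# One explicit row per case (hypothetical names mirroring Case A / Case B).
CASE_OPTIONS = {
    "A": Options(a=True, b=False, c=True, c1=True, c2=True),
    "B": Options(a=False, b=True, c=False, c1=False, c2=False),
}


def options_for(case: str) -> Options:
    """Look up a case; an unknown case fails loudly instead of hitting a default."""
    try:
        return CASE_OPTIONS[case]
    except KeyError:
        raise ValueError(f"no option table defined for case {case!r}") from None


print(options_for("A"))
```

Adding a case means adding one row, and reviewing a case means reading one row, which is what makes this easier to test than a pile of rules with exemptions.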

Instead we have these decision setups that made sense when we had 10 cases that all fell into one of three rules. But over 15 years? 100 cases, which we shove into 6 separate “generic” rules, which are full of case-by-case exemptions…

Adding a new case ALWAYS breaks something in that section, and half the time it takes the debugger to figure out where options you defined as off get turned on again.

The only reason I haven’t fixed it? I haven’t been authorized the two weeks I’d need, minimum (two days to write it, two WEEKS to test it exhaustively), since we’re always time-crunched on new features that our users pay for.

It won’t get rewritten until it breaks so badly it’d take LONGER than two weeks to sort out.

Clearly, you need to break it that badly…

One of the things I take to heart is his admonishment to stop trying to do the compiler’s job.

Do they still have to harp on that? I don’t keep a copy of Elements of Programming Style or the original Software Tools on my shelf any more, but even back in the 70s best practice was (1) make it work first, then make it faster, and (2) if it doesn’t run fast enough, think about algorithms and/or data structures, since the optimizer is only going to get you so far.

For a bit of time in 1978 I had the world’s fastest code for solving a particular narrow class of problem. Know how I got it? Took the box of cards from the previous world record holder and removed all of the stupid things they had done thinking it would speed things up. 10% fewer lines of code when I got done with that, 20% faster.

Algorithmic efficiency is taught a lot, and thus students tend to focus on it.

When what you really need to take away is how to read canned solutions for a “good enough” choice (i.e., which search or sort algorithm is useful here, judged on everything from execution time to ease of integrating into the current code base to further extension), and how to glance at your own code and see “Oh yeah, I’m like… looping that inner bit 5000 times when 2/3rds of it only needs to be done once, let me move that out for some easy gains.”
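The “move that out” fix is loop-invariant code motion: if a computation inside a loop doesn’t depend on the loop variable, do it once before the loop. A small Python sketch with hypothetical function names, where both versions return the same answer but the second computes the weight total only once:

```python
import math


def weighted_means_slow(rows, weights):
    """Weighted mean of each row; recomputes the weight total on every pass."""
    results = []
    for row in rows:
        total = math.fsum(weights)  # loop-invariant: same value every iteration
        results.append(math.fsum(x * w for x, w in zip(row, weights)) / total)
    return results


def weighted_means_fast(rows, weights):
    """Same result, with the invariant total hoisted out of the loop."""
    total = math.fsum(weights)
    return [math.fsum(x * w for x, w in zip(row, weights)) / total for row in rows]


rows = [[1, 2], [3, 4]]
weights = [1, 3]
print(weighted_means_fast(rows, weights))  # [1.75, 3.75]
```

Optimizing compilers often do this automatically in simple cases; the ones worth doing by hand are those the compiler can’t prove safe, such as calls with side effects.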

Real-world stuff is a little more complex. Right now I’ve got (AGAIN ON BACKBURNER DARNIT) an issue where years of laziness (“Just call the event for that control, that’ll sort out items A-Z correctly”) have led to occasionally deeply nested event calls that are totally unnecessary.

Again, a lot of it comes down to experience. Degrees tend to teach you foundational computer science stuff, or really narrowly tailored coding stuff. Both turf you into software engineering with different biases that take experience and mentorship to grind off.

(And then there are the “self-taught” coders. I’ve seen absolute brilliance marred by blind spots the size of a T. rex. Also, never let an engineer do anything with a database. They WILL turn it into a spreadsheet. A horribly, horribly inefficient spreadsheet. With a primary key that’s just a row number….)

My current rat’s nest was written by a self-taught engineer who started in C, then took his bad habits to C++ and Java. Read either his C++ or his Java and you can tell he learned all his bad habits in C. I get into discussions with my leadership about what we are doing with that tool (because it’s a very effing useful tool and 3 of our biggest customers love it, but we cannot keep supporting it as is), and they constantly get hung up on trying to understand the code. I have to pull them back and remind them that there is no understanding the code, there is only understanding what the code was trying to do, and replicating that (we know how the algorithms work and have that documentation; it’s just his implementation of those algorithms that is a nightmare). They keep thinking there is something useful in the code itself. Sunk cost fallacy? There isn’t anything useful in there except stark lessons in what not to do.

One of the projects I support, which I have lobbied EXTENSIVELY to nuke and rebuild from the ground up as “XXXX 2”, was sloppily converted from C to C++ by someone who almost understood object-oriented programming.

That “almost” resulted in one of the most brittle, badly written monstrosities I have ever encountered. Fixing any critical bug in it means fixing a dozen more in JUST that subsystem, as it only really works along the pathways its primary user used.

What kills me, KILLS ME, is that the one bit that’s not object-oriented is the internal data, which is held in multiple arrays that reference and depend on each other in weird ways (clearly cut and pasted from the initial, pre-conversion code).

That internal data? It is the cleanest, clearest example of “This should be an object” you could ask for, because ALL this software does is load data from offline sources, and manipulate and display it in various ways. And it’s not.

It’s the old, memory-constrained nest of pointers to pointers to pointers in arrays where, god help me, I literally can’t remove or add items to some of the arrays without breaking EVERYTHING.
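To make the “this should be an object” complaint concrete, here’s a small Python sketch using hypothetical data (not the actual program’s): with parallel arrays, a cross-reference is just an index, so deleting one entry means renumbering every index that pointed at or past it. With objects, a reference points at the item itself and nothing needs renumbering.

```python
from dataclasses import dataclass
from typing import List, Optional


# Parallel-array style: item i is names[i] and parents[i];
# a parent is stored as an index into those same lists.
def remove_parallel(names: List[str], parents: List[Optional[int]], idx: int) -> None:
    """Delete item idx and repair every index that referred to or past it."""
    del names[idx]
    del parents[idx]
    for i, p in enumerate(parents):
        if p == idx:
            parents[i] = None  # its parent was the deleted item
        elif p is not None and p > idx:
            parents[i] = p - 1  # everything after idx shifted down by one


# Object style: a parent is a direct reference, so indices never matter.
@dataclass(eq=False)  # compare by identity, not by field values
class Item:
    name: str
    parent: Optional["Item"] = None


def remove_object(items: List[Item], target: Item) -> None:
    """Delete target and clear any references to it; no renumbering needed."""
    items.remove(target)
    for item in items:
        if item.parent is target:
            item.parent = None


names, parents = ["pump", "valve", "sensor"], [None, 0, 1]
remove_parallel(names, parents, 0)
print(names, parents)  # ['valve', 'sensor'] [None, 0]

pump = Item("pump")
valve = Item("valve", pump)
sensor = Item("sensor", valve)
items = [pump, valve, sensor]
remove_object(items, pump)
print([i.name for i in items], sensor.parent.name)  # ['valve', 'sensor'] valve
```

Forget one renumbering step in the parallel-array version and every later lookup silently points at the wrong item, which is exactly the “can’t add or remove without breaking EVERYTHING” failure.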

Worse yet? At least half the program is functions and abilities to handle data types and manipulations that were common 20+ years ago but are no longer used.

A modern version of this software would be simpler, more elegant, and have at most a third the functionality.

And bluntly, I could slap it together in C# in maybe two months, needing only a poll of its few users to find out what features they actually use.

Add two weeks if I slap it together in C++ using the current GUI library (which is fantastic but offers no WYSIWYG editor, which would vastly accelerate things).

Ain’t Technical Debt wonderful.

As we used to say about some, “They can write bad Fortran in any language.”

As a grad student I inherited 10,000 lines of Fortran developed on an IBM machine prior to the adoption of Fortran 77, and was paid to port it to a CDC machine. It was absolutely horrible Fortran.

Bless the people who do database design well, so that I don’t have to do it badly. Which I do.

PS The Book

https://www.amazon.com/gp/product/B001GSTOAM/ref=ppx_yo_dt_b_search_asin_title?ie=UTF8&psc=1

The application I work on is quite interesting. As I’ve mentioned before, it is about 600k lines of Common Lisp and about 400k lines of C++. It is hyper-optimized, with a massive amount of bit twiddling. We pack everything we can into bitmaps rather than giving it a whole integer. We avoid pointers and mallocs as much as we can. The redeeming grace is that Lisp lets us build macros for all of this, so in a sense we’re extending our “compiler” to let us write hyper-optimized code.

This is all necessary — at least it was when the code was first written. The problem space is massive. The room for high level optimization is quite limited, as airlines did not design their pricing rules for ease of processing. We use as much data sharing and memoization as we practically can, along with a fair bit of tree pruning (with the ensuing loss of search space), but in the end our application lives or dies by its ability to process an exponentially growing mass of data as fast as it possibly can.
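For anyone who hasn’t done much bit twiddling, here’s the basic idea of the bitmap packing mentioned above in a tiny Python sketch: several yes/no facts share one integer, one bit each, and a whole set of conditions can be checked with a single mask-and-compare. The flag names are invented for illustration; this is not the application’s actual Lisp/C++ code or data layout.

```python
from enum import IntFlag


class FareFlags(IntFlag):
    """Hypothetical yes/no fare attributes, one bit each."""
    REFUNDABLE = 1 << 0
    ROUND_TRIP_ONLY = 1 << 1
    SATURDAY_STAY = 1 << 2
    ADVANCE_PURCHASE = 1 << 3


def meets_all(fare: FareFlags, required: FareFlags) -> bool:
    """True if every bit set in `required` is also set in `fare`."""
    return (fare & required) == required


fare = FareFlags.REFUNDABLE | FareFlags.SATURDAY_STAY
print(int(fare))  # 5: bits 0 and 2 set
print(meets_all(fare, FareFlags.REFUNDABLE | FareFlags.SATURDAY_STAY))  # True
print(meets_all(fare, FareFlags.REFUNDABLE | FareFlags.ROUND_TRIP_ONLY))  # False
```

In a compiled language that check is one AND and one compare on a machine word, and a few of these words fit in a single cache line.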

In practice, our design resembles the entity/component scheme used in video game engines: https://en.wikipedia.org/wiki/Entity_component_system

This is decidedly _not_ object oriented, but instead it focuses on data locality and cache performance, even at the cost of “nice clean code” schemes from the OO/FP world. For video games, this makes a visible difference. The more polygons you can pump out per second, the better the game feel you will get — at least in general. It is similar for our application.
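A toy Python illustration of the data-oriented layout being described: each component field is its own packed array indexed by entity ID (a “struct of arrays”), and a “system” is a plain loop over those arrays rather than a method on each object. The class and field names are made up, the sketch skips the part of a real ECS where entities can carry different component sets, and Python won’t show the cache benefits; in C or C++ this layout is what lets the hot loop stream through memory instead of chasing pointers.

```python
from array import array


class World:
    """Struct-of-arrays storage: one packed float array per component field,
    all indexed by entity id (hypothetical position/velocity components)."""

    def __init__(self):
        self.pos_x = array("d")
        self.pos_y = array("d")
        self.vel_x = array("d")
        self.vel_y = array("d")

    def spawn(self, x, y, vx, vy):
        """Append one entity's components and return its id (its index)."""
        for column, value in ((self.pos_x, x), (self.pos_y, y),
                              (self.vel_x, vx), (self.vel_y, vy)):
            column.append(value)
        return len(self.pos_x) - 1


def movement_system(world, dt):
    """A 'system': one tight pass over the component arrays."""
    for i in range(len(world.pos_x)):
        world.pos_x[i] += world.vel_x[i] * dt
        world.pos_y[i] += world.vel_y[i] * dt


world = World()
e = world.spawn(0.0, 0.0, 1.0, 2.0)
movement_system(world, 0.5)
print(world.pos_x[e], world.pos_y[e])  # 0.5 1.0
```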

“10% slower is fine” is definitely not true for vidya, nor would it work for our application. For us, a 10% speedup means more and better solutions provided to our customers — and in reality we’re looking at more like a 50% speedup across the board. After all, if you are processing 100k entities, getting a high rate of cache hits versus cache misses is an enormous speedup. It’s very non-trivial.

I have always found your descriptions of the optimization problems fascinating, and except for (a) a spouse with progressive dementia, (b) granddaughters, and (c) depending on the ability to work from where I am and never have to fly, I’d probably be applying (whether you have current openings or not).

Are they teaching heavy parallelization these days? Clearly, that’s where performance increases are going to have to come from.

Not early on, they aren’t. I’ve got one more dev class before this short program is done, so maybe we will touch on it.

But I agree, parallel processing is critical. Sadly, I’m not sure how early the concept of multithreading is getting touched on, outside of managing GUI/system interactions. Moving into parallel processing, data analysis, and basic AI (neural nets, etc.) should probably start happening sooner.

Yeah, FinFETs and other non-planar transistor designs let them keep going past 2012. More interesting is to watch manufacturers drop away. Only Intel, Samsung, and TSMC continue to push for smaller sizes. GlobalFoundries, one of the DOD’s “trusted” suppliers, was blunt about it, saying that they couldn’t afford the cost of R&D for fabs below 10 nm.

It’s more fun to watch how parallel processing is evolving for consumer devices. Mixes of both slow low-power cores and fast high-power cores. In addition to GPUs, there are starting to be specialized cores that run certain AI models efficiently. Apple’s shiny new M1 Ultra (technically two chips plus a pile of very high speed smart interconnect) has 20 CPU cores, 64 GPU cores, 32 neural net cores, dedicated video and image processing, plus up to 128 GB of unified memory allocated to processors as necessary. 114 billion transistors.

Related: https://www.youtube.com/watch?v=GVsUOuSjvcg&ab_channel=Veritasium

The worst, biggest, sloppiest code is always the UI. We need to get users to appreciate the clean, simple logic of the command line.

One of the rules in the lab where I helped build one-off test systems was, “Assign Mike the trickiest of the real-time parts, but don’t let him anywhere near the UI.”