Morning Ed: Society

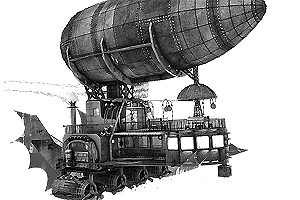

[So1] This wins.

[So2] How brewing became a boys’ club when women owned the ground floor. Coffee, on the other hand, women were not as fond of.

[So3] This is really interesting: They’re building “memory towns” for people with dementia, and it has ramifications for the rest of us.

[So4] History is bad. Science says so.

[So5] This is sort of a back alley argument for lightened copyright restrictions. A lot of this stuff they don’t care enough to offer, but also don’t want to give away. We can make the choice for them!

[So6] Derek Thompson writes about the effect of meritocracy on sports. The arguments about parental involvement are good, though “If it’s not a traveling league why bother” says more about a more general defect in our culture than about inequality.

[So7] The last line of a couple dozen shows. The Savage shows, The Wonder Years and Boy Meets World, got the most mileage out of theirs. The sad ones are the “What now?” ones.

[So8] I know I say this about a lot of things, but isn’t there a middle ground here? Garfield was designed around merchandising and it shows, but Calvin and Hobbes gear would have brought joy to people, and instead we have pissing Calvin as the primary cultural artifact. More on Calvin and Hobbes.

[So9] I just don’t think you’ll ever do better than Corporate Merger Jesus.

[So0]

More people should know the great story behind the history of nachos. pic.twitter.com/2ECKAyABdz

— ATTN: (@attn) September 27, 2018

So3: I remember reading about the first of these, I think it was in Holland. But my understanding was that the idea there was that it was a RESIDENTIAL center as well as a hangout, so people needing memory care could move there and live there – and not have to be driven and dropped off, as they suggest in the article about the commodified US version of it.

I dunno. Part of me goes, “Maybe this is a way of using all those dead malls for something useful,” part of me cringes a little bit at the idea that it’s a place to warehouse people/that it’s probably expensive for people to use, given how this kind of thing goes in the US.

I have aging parents (in their 80s). At this point they are both mentally fine and one is physically capable, but I shudder to think what would happen if that were to change. I’m not sure what I would do if they needed round-the-clock care; I live 700 miles away and have a career where I can’t easily take an indefinite leave of absence. I know that lots of nursing homes are horror-shows and even the good ones aren’t great places to be…

I also worry for myself: no spouse, no kids, and while my health is good now and I come from long-lived stock that tends to stay able to care for themselves until they drop dead, still, I worry. (My university recently terminated its long-term-care insurance policy thing, and you can’t pay into one – at least not reasonably – as an independent individual.)

So2: Here is a related story from 2017 about the Jewish involvement in the brewing industry.

https://www.tabletmag.com/jewish-arts-and-culture/244365/oktoberfest-was-invented-by-jews

Jews were forbidden to brew beer after the Beer Purity Law was passed but many made their living growing hops because small time gardening was one of the few jobs available to them. After the Jews of Germany received emancipation in 1868, many went into the brewing business and modernized the industry.

So1: You’ve hit my sweet zone with this one. The linked article’s conclusion is correct, but manages to get a lot wrong along the way, starting with his characterization of the people arguing that “data” must be plural:

If we unpack this, the underlying assumption is that there is a “correct English” that is entirely divorced from how actual English speakers communicate. (Or, to be pedantic, narrow this to adult native English speakers without any neurological issues that affect their speech.)

What is this idealized correct English? How is it identified? Since by definition we can’t look to actual English, it could be anything. In practice, the answer is that a small body of self-selected blowhards writing usage manuals and the like throw out ideas among themselves. Most die a quiet death, but a few stick. The self-selected blowhards achieve a consensus among themselves, and then pitch their usage dictum to the world at large. This appeals to two groups: people who enjoy bullying others, and people who are insecure and allow themselves to be bullied. In practice both groups are small enough that the effect on actual English is small. Despite this, the really persistent usage dicta can hang around for centuries.

An additional assumption of the “data” debate is that a word borrowed from another language must retain its grammatical characteristics from the parent language. This assumption is almost always left discreetly unstated, as it is stupid on its face. Borrowed words make grammatical shifts along the way all the time. The “data is plural” claim is an unprincipled ad hoc argument that collapses under the most modest scrutiny.

Would you allow a code language to develop as English develops, by people ignoring self-selected blowhards and coding any way they wanted? After all, who wants to be bullied about the correct way to write FORTRAN or C++ code?

Most languages have a mechanism to codify usage changes that is both prescriptive and normative. As the language evolves, the changes are codified, and the old usages are dropped out. And we lucky speakers and readers of most languages do not have any kind of crisis growing up on how to relate a written word with a spoken word, with extra or missing letters in it.

No, but then again a code language and a natural language serve entirely different purposes, so even as a rhetorical question this seems off point. To put it another way, the word “language” in “code language” is a metaphor. It is a useful metaphor, but only up to a point. Suggesting that the needs of a code language somehow dictate the needs of a natural language blows right past that point.

This is utter nonsense. The vast majority of languages are not codified at all, and have nothing normative about them. If we are lucky, some plucky field linguist has spent a year or so living in the jungle with the speakers, and will describe the language to his colleagues once he gets back to his academic institution. L’Académie française is the exception, wildly outside the norm.

I stand corrected. I meant languages spoken by large numbers of people. This includes Spanish (the second most widely spoken native language, with 480 million speakers), Hindi (codified in the 1950s in both grammar and spelling), Arabic (Modern Standard Arabic, which is mandated to be taught in six countries, with 240 million speakers), the Turkic languages (180 million), French, as you already mentioned (150 million native speakers), etc.

And of course, basic Chinese ideograms (at least in the PRC) have been standardized since the 1960s.

But you are absolutely right – most languages are not codified.

Yet the languages most people speak are.

English, not the Académie française, is the outlier.

Even among the major world languages it isn’t that straightforward. Yes, there are the Académie française, the Real Academia Española, and so forth. But they are considerably less influential on actual usage than they would like. Ordinary speakers and writers do their own thing, with the academy tut-tutting.

Modern Standard Arabic is a different sort of creature. Yes, it is taught widely. But it isn’t taught in the sense that an American student might be (though probably not nowadays) taught English grammar. It is essentially a foreign language. Modern Arabic isn’t a single language. It is a collection of languages derived from Classical Arabic, in the same way that the Romance languages are derived from Latin. They are not mutually intelligible, at least across the geographical range. It may be that each is mutually intelligible with its neighbor on either side. I don’t know one way or the other. This is how the Romance languages worked, particularly along the Mediterranean coastline. There was a chain of dialects running from Sicily to Lombardy, across southern France, and down the Spanish coast to Gibraltar. Each was mutually intelligible with its neighbors, but not along anything like the entire chain. This is still somewhat true, but modern national boundaries have been fixed long enough to influence linguistic development and there has been some consolidation of dialects.

So getting back to Modern Standard Arabic, it is an artificial updating of Classical Arabic, widely used as a second language. My understanding is that essentially no one speaks it as a first language. It would be as if Italian scholars had in the 19th century devised an updated version of Latin influenced by later developments, and pitched this Modern Standard Latin as a second language to be used throughout Italy. (What actually happened was the Tuscan dialect was adopted as Standard Italian. Why Tuscan? The flip, but not entirely wrong, answer is because Dante Alighieri.) Modern Standard Arabic is an interesting linguistic phenomenon, but not reflective of common usage.

Would you allow a code language to develop as English develops, by people ignoring self-selected blowhards and coding any way they wanted?

Perl.

@richard_hershberger

My case for using “data is” is that in contemporary English, data is a mass noun, like water or sand. Data is no longer a set of individual facts that can be considered in isolation but a mass of information that is treated as a unit, whether by summary statistics, modelling, or graphs. You can tell by the way that the singular “datum” has almost entirely disappeared from contemporary English (the only time I hear people use “datum” is when they are talking about map projections).

So4: This mostly illustrates why I haven’t read Salon regularly in years. It is startling, looking at what it is today, to recall that there was a time when it was relevant.

“On accident” makes my wife as crazy today as it did when our kids brought it home from school 25 years ago.

So8: Excellent article. Thank you for bringing it to my attention. To answer your question, yes, there is a middle ground. You can buy a plush Bill the Cat, but the merchandising is narrowly limited.

The important point that the article misses is over-longevity. Part of why Calvin & Hobbes holds up so well is that Watterson quit while still at the top of his game. He had said what he had to say, so he stopped talking. Peanuts ran for about fifty years. Frankly, it would have been better were it half that. A comic strip simply can’t stay good for that long. The present-day comics page is full of zombie strips: Comics that were shopworn when I was a kid, now written by the next generation. How long can a strip remain relevant? Doonesbury hasn’t been since the Reagan administration at best. Dilbert spoke truth in the ’90s. Now it is a parody of itself. Peanuts held up better than most, but the loss of its edge wasn’t merely due to merchandising watering it down. It had simply gone on too damn long, and no longer had anything to say.

There are no truly relevant newspaper comics today. Those are published on the web nowadays. But I wonder about the direction of causation. I suspect that there are any number of interesting young artists who would be thrilled to be on the funnies page, but those pages are completely ossified. There is some newspaper subscriber who adores Beetle Bailey and will complain if his local newspaper drops it in favor of something good. Better not to risk him cancelling his subscription. The modern newspaper comics’ irrelevance is self-inflicted.

There was a comic in my hometown local paper, I’ll call it “Andrew” (it had a different name), whose characters were a couple of bald-headed little babies, the humour derived mostly from the characters doing babyish things and having a babyish understanding of the world but also being very articulate while discussing them. It was a comic that had clearly gone on too long.

It turned out my high school friend “Andrew” was the titular character, and his mom had started drawing the comics when Andrew and his brother were babies – but then she hadn’t had the characters age, so she couldn’t use much of what was actually happening in their lives as material.

Andrew was a bit of a hot mess for a while there, and did some time in juvie for arson. We laughed at the time that that would have been the perfect moment to make a break from recycling 14-year-old material and jump straight to current events. Anyway, Andrew got his stuff together and is well now.

There is even a town in Massachusetts called Alewife!

I really don’t get the posters of Jesus. Really don’t get them. I might as well be an alien anthropologist looking at them.

The art is banal to the point of parody. The message is a straightforward one: that God is present and supportive in all the facets of our lives. There is no task so challenging that God can’t guide us through it; there’s no task so mundane that God doesn’t care about it.

The 13th picture in that series is awesome – the driver is just realizing that he might be in real trouble in a second, and Jesus is way ahead of him and looking forward to the chaos like “Aw yeah, this is gonna be good!”

They seem very idolatrous from a Jewish standpoint. Then again, rabbis kind of maintained that God is a bit more remote from humans than Christianity did. Maimonides gingerly suggested that God doesn’t even really hear or care about human prayer, but it’s something he has us do for expectations.

Which sort of illustrates the dilemma for contemporary Christians.

Illustrating Jesus in his context of 1st Century Galilee, of robes and stone buildings seems believable enough.

But transporting Jesus to 21st century America is like creating a realistic Gotham City. It attempts to show a supernatural event in a natural place.

There just isn’t any way an artist can place him in a contemporary setting without it seeming bizarrely incongruous. The results can only range from awkward to hilarious.

I’ve tried.

My thesis project in architecture school was a church. I made a painting of the altarpiece, of a Jesus clad in blue jeans on a cross of steel I beams. I got several comments from those who felt it was disrespectful.

The problem is a deeper one revolving around the nature of the faith.

The Gospels make it clear that Jesus was unremarkable looking, wholly unrecognizable as a divine being. He ate, sweated, bled and crapped like any ordinary Galilean of his time. No glowing halo, no thunderbolts. Heck, he wasn’t described as even being handsome.

Theologically, showing a 21st Century Jesus aglow with a divine light in robes contradicts that message. A correct image might show Jesus as a black guy in blue jeans, mingling with undocumented immigrants and drug addicts.

But that too, would be awkward since really, who wants to fall at the knees of some regular guy in a hairnet handing out loaves and fishes at the Midnight Mission?

The problem is, since almost the start, it’s been easier to worship the glorified Jesus than to imitate the flesh-and-blood Jesus.

It varies a lot by era. The Greek tradition was to have Jesus or saints depicted typically alone, and very stylized. It was supposed to be God’s view. Small mouths, wide eyes, because the subject is looking at God’s majesty. A lot of Renaissance presentations of the Crucifixion are very naturalistic except for a glow from the face. I’ve never seen the need to race-lift Jesus, and it could have some ugly motivations, but quite often the artist is just depicting what he knows. A Northern European or Japanese artist may never have seen a Semitic-looking Jew.

Jesus lived a specific life in 1st century Judea. The depiction of Jesus in blue jeans wouldn’t make sense to me. I can understand the tension between depicting Jesus pre-Crucifixion versus glorified, but not a transference of Jesus’s narrative to a different era.

ETA – I think that the depictions in the link would make more sense with guardian angels. There would be far less juxtaposition (not the right word, but you know what I mean).

You mean something like this?

https://www.imgrumweb.com/post/BDUiEHFh5qz

I always liked this design, BTW

That’s a skinhead tattoo. It is supposed to represent how they get crucified by the press. Real working-class England from ’69.

Oi!

I think you mean contemporary Evangelicals.

My friends who grew up Catholic or Mainline Protestant would roll their eyes at this stuff. I think it is Evangelicals who like seeing Jesus guide everything.

Mainline/liberal Protestant here (Disciples of Christ) and yeah, I find the paintings slightly odd and uncomfortable. I mean, yes, I often ask for “guidance and direction” but I wouldn’t have one of those paintings…I think the whole “Jesus in contemporary American setting” thing weirds me out a little.

I wouldn’t *say* anything to someone who had one of those (most mainline Protestants are also painfully polite) but I wouldn’t want to have one “watching over me” every day in my office. If someone takes comfort from it, fine, but not for me.

I agree that this art is more Evangelical than Catholic or Mainline Protestant. But I think it’s more an aesthetic than a theological difference.

I just want to quote this because I see this as one of the major problems in modern American Christianity. And I am a Christian myself. (Your response to the issue was better and clearer than mine.)

It’s similar to the idea of “Love is something you DO, not something you FEEL.” I think we’ve gotten way too much into the “how do I FEEL” end of things rather than the “how is it best for me to ACT” end of things.

Then there is the Homeless Jesus sculpture, which provoked complaints from residents who didn’t realize that it was a sculpture, much less of Jesus. Hilarity ensued: https://en.wikipedia.org/wiki/Homeless_Jesus